Project by Marta Verde.

In Gamboa, I was astonished by the gorgeous nature that surrounded us.

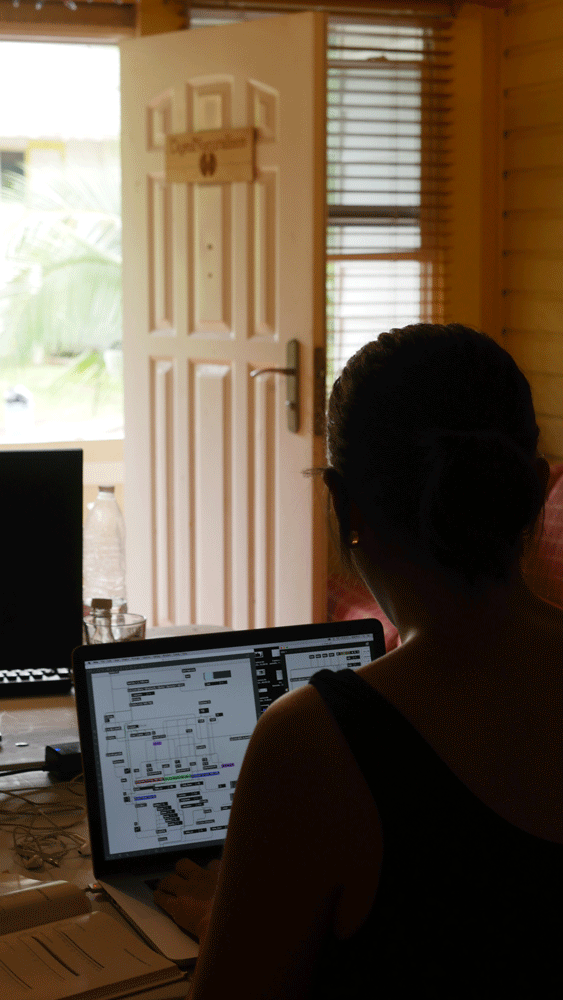

I’m a creative coder and visual artist. I mostly work with realtime graphics in live performances with musicians, dancers or other artists. I also create visual-based interactive installations.

My intention was to transform what we don’t see into viewable data, inspired by the unique and exotic rainforest vegetation of Gamboa.

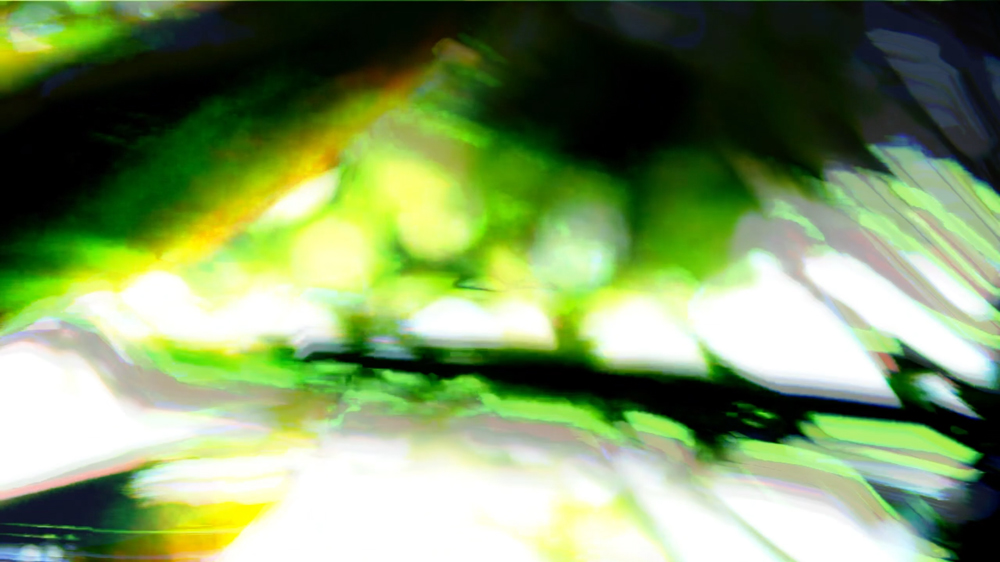

I used the small movements of leaves and other natural elements, stirred by the wind and rain, as external factors that let them reveal themselves. Nature can be a painter, helped by digital techniques (although plants don’t really “move” on their own), prompting its own memories: the accumulation of movement into textures. The behavior of light passing through matter is also valuable for this work.

The tool I used to generate these images was Max/MSP. I spent the first two days in Gamboa recording a lot of video footage, then created a program that modified that video in realtime, with timed random changes to certain parameters, which allowed me to obtain happy, unexpected results.

The expected result was an A/V piece generated in realtime, using the video’s own sound, also modified. Instead, it ended up being a set of beautiful single still screenshots (alongside the videos I also captured as documentation), something I loved because they look like real paintings.

First, gather input materials:

- Record video footage of palm leaves moving, flowers, water… anything moved by the wind and rain.

- Select short loops and export them at a smaller resolution (1280×720) and with a better-performing codec, using ffmpeg:

```shell
# Scale to 1280x720 at ~1000 kbit/s video and 128 kbit/s audio
/usr/local/bin/ffmpeg -i originalvideopath.mov -s 1280x720 -b:v 1000k -b:a 128k convertedvideopath.mp4
```

Then I built the patch (program) in Max/MSP, a visual programming environment where programs are built by connecting nodes instead of writing text code.

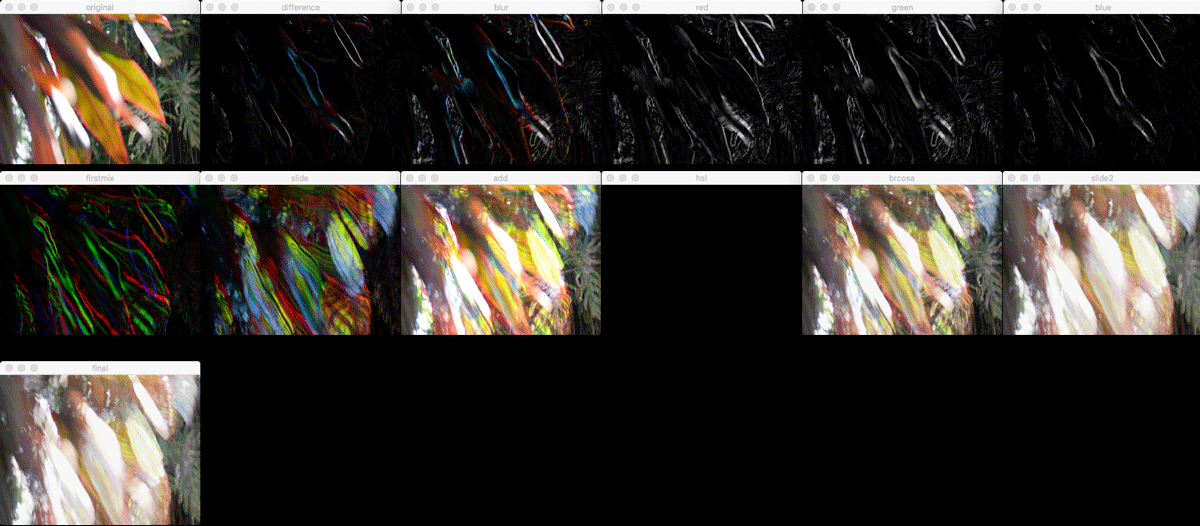

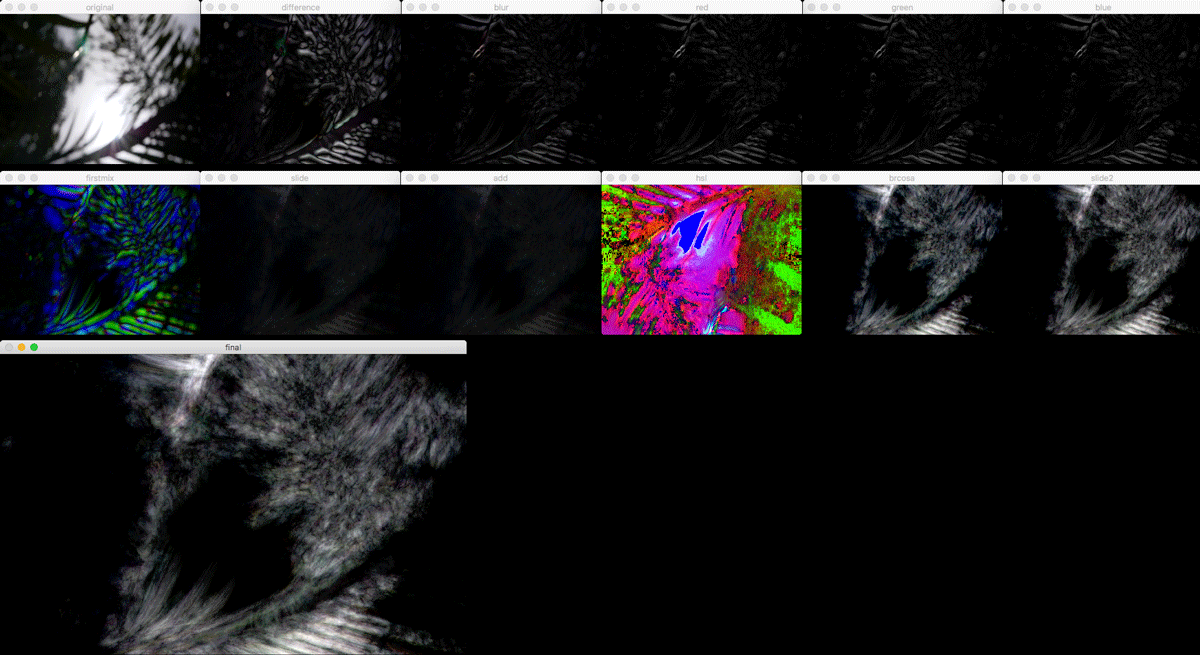

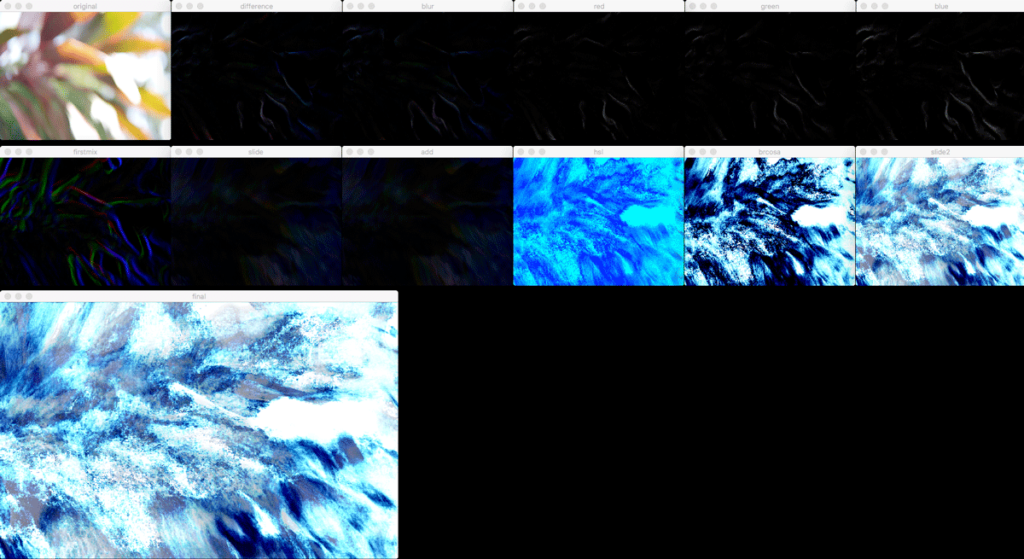

The process consisted of chaining alterations to the original video, with the goal of reaching different outputs in realtime:

- Playback speed

- Frame Differencing

- Blur

- Separate each color channel (ARGB)

- Rejoin those channels with different delay times

- Pixel-by-pixel slewing of video frames (first pass)

- Blend this result with the original video in add mode

- Switch the color mode to HSL for fancy glitchy effects (ON/OFF)

- Basic contrast/brightness/saturation control

- Pixel-by-pixel slewing of video frames (second pass)
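Three links of this chain (the channel delay, the pixel slewing and the add-mode blend) can be sketched in plain Python, assuming a frame is a flat list of `[r, g, b]` pixels with values from 0 to 255. This is a hypothetical illustration, not the actual Max/MSP patch: the names `ChannelDelay`, `Slide` and `add_blend` are made up, and the slewing uses the common one-pole formula y = y + (x - y) / slide, with separate rates for rising and falling values.

```python
from collections import deque
from typing import Deque, List, Sequence

Pixel = List[float]   # one pixel as [r, g, b], values 0..255
Frame = List[Pixel]   # a frame flattened to a list of pixels


class ChannelDelay:
    """Rejoin the colour channels, each one taken from a different past frame.

    delays[c] is how many frames channel c lags behind the newest frame.
    """

    def __init__(self, delays: Sequence[int]):
        self.delays = list(delays)
        self.history: Deque[Frame] = deque(maxlen=max(self.delays) + 1)

    def process(self, frame: Frame) -> Frame:
        self.history.append(frame)
        newest = len(self.history) - 1
        out: Frame = []
        for i in range(len(frame)):
            # Until enough frames have arrived, fall back to the oldest stored one.
            out.append([self.history[max(0, newest - d)][i][c]
                        for c, d in enumerate(self.delays)])
        return out


class Slide:
    """Per-pixel slewing: y += (x - y) / slide, with separate rising/falling rates."""

    def __init__(self, slide_up: float = 1.0, slide_down: float = 1.0):
        # A rate of 1 follows the input exactly; larger values smooth more.
        self.slide_up = max(slide_up, 1.0)
        self.slide_down = max(slide_down, 1.0)
        self.state: Frame = []

    def process(self, frame: Frame) -> Frame:
        if not self.state:
            self.state = [list(p) for p in frame]   # first frame initialises the state
        else:
            for prev, curr in zip(self.state, frame):
                for c, x in enumerate(curr):
                    rate = self.slide_up if x > prev[c] else self.slide_down
                    prev[c] += (x - prev[c]) / rate
        return [list(p) for p in self.state]


def add_blend(a: Frame, b: Frame) -> Frame:
    """Additive blend of two frames, clamped to 255."""
    return [[min(255.0, x + y) for x, y in zip(pa, pb)] for pa, pb in zip(a, b)]
```

Feeding a clip through something like `ChannelDelay([0, 2, 4])` keeps red on the current frame while green and blue trail behind, which should produce colour fringes around anything that moves; passing the result through `Slide` with a large `slide_down` then leaves slowly fading trails, one way movement can accumulate into texture.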

I set up some “presets”, because each video worked best with different parameters. I also added some randomness: at regular time intervals, the video source, speed, orientation and other basic parameters changed randomly, giving the piece life and producing those happy, unexpected results.
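The presets and timed randomness can be sketched as a small scheduler. In this hypothetical Python version each preset names a video, fixes some parameters and gives ranges for others; every `interval` seconds the scheduler picks a preset at random and rolls new values inside those ranges. The class names, parameter names and ranges are illustrative assumptions, not the values used in the patch.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Preset:
    """Hypothetical per-video preset: fixed settings plus ranges that may be randomised."""
    video: str
    fixed: Dict[str, float] = field(default_factory=dict)
    random_ranges: Dict[str, Tuple[float, float]] = field(default_factory=dict)


class RandomScheduler:
    """Every `interval` seconds, switch to a random preset and re-roll its random parameters."""

    def __init__(self, presets: List[Preset], interval: float, seed: int = 0):
        self.presets = presets
        self.interval = interval
        self.rng = random.Random(seed)   # seeded so a run can be reproduced
        self.next_change = 0.0
        self.current: Dict[str, object] = {}

    def update(self, now: float) -> Dict[str, object]:
        """Call once per frame with the current time in seconds; returns the active parameters."""
        if now >= self.next_change:
            preset = self.rng.choice(self.presets)
            params: Dict[str, object] = {"video": preset.video, **preset.fixed}
            for name, (lo, hi) in preset.random_ranges.items():
                params[name] = self.rng.uniform(lo, hi)
            self.current = params
            self.next_change = now + self.interval
        return self.current
```

Seeding the random generator is a design choice for reproducibility: rerunning with the same seed replays the same sequence of changes, which helps when you want to get a happy accident back.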

The first and most important effect is frame differencing, a technique where the computer compares two video frames. If pixels have changed, something in the image has apparently changed too (something moving, for example). Most implementations add some blur and a threshold to distinguish real movement from noise.
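As a rough illustration of frame differencing with blur and threshold, here is a minimal pure-Python sketch on grayscale frames stored as lists of rows. It is not the Max/MSP patch; the function names, the box blur (applied to the difference image here, although blurring the input frames first is an equally common choice), the default radius and the threshold of 30 are all assumptions.

```python
from typing import List

Frame = List[List[float]]  # grayscale frame: rows of pixel values in 0..255


def abs_diff(prev: Frame, curr: Frame) -> Frame:
    """Per-pixel absolute difference between two frames of equal size."""
    return [[abs(c - p) for p, c in zip(prow, crow)] for prow, crow in zip(prev, curr)]


def box_blur(frame: Frame, radius: int = 1) -> Frame:
    """Average each pixel with its neighbours in a (2r+1)x(2r+1) window, clipped at the edges."""
    h, w = len(frame), len(frame[0])
    out: Frame = []
    for y in range(h):
        row = []
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += frame[yy][xx]
                    count += 1
            row.append(total / count)
        out.append(row)
    return out


def motion_mask(prev: Frame, curr: Frame, threshold: float = 30.0,
                radius: int = 1) -> List[List[int]]:
    """1 where the blurred difference exceeds the threshold (likely movement), else 0 (likely noise)."""
    blurred = box_blur(abs_diff(prev, curr), radius)
    return [[1 if v > threshold else 0 for v in row] for row in blurred]
```

With a radius of 1, an isolated noisy pixel is averaged with its unchanged neighbours and usually falls below the threshold, while a changed region spanning several pixels survives.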

Some screenshots showing the whole process, with each link of the chain in a separate window:

The final chosen frames:

And some of the videos those screenshots came from:

Special thanks to all the people I met at Dinacon, especially my teammate-travellers Anna Carreras and Mónica Rikic <3