Subversive Submersibles, water-adapted augmented reality

Oya Damla, Kira deCoudres, Adam Zaretsky, Ryan Cotham

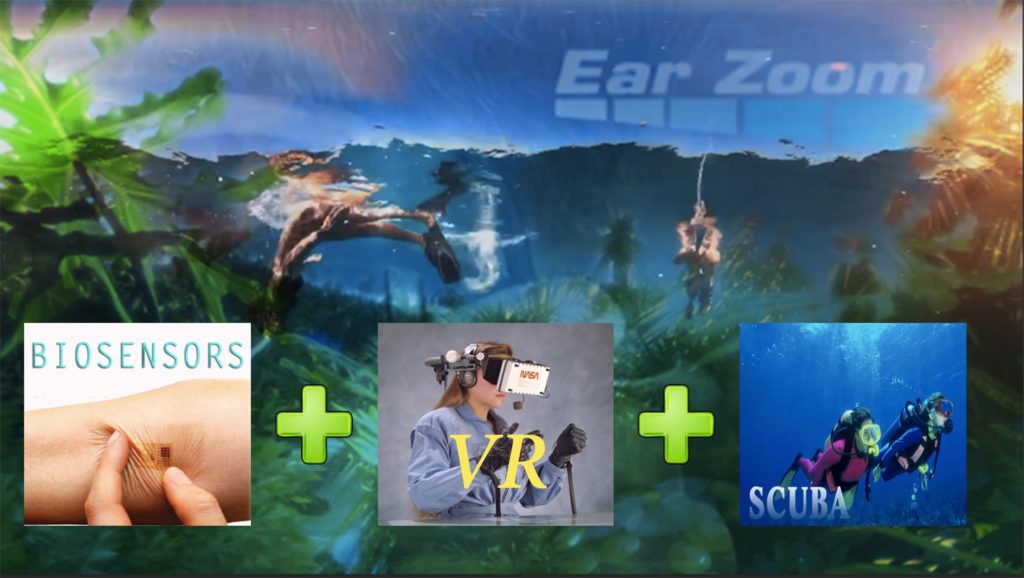

Abstract – ImmerSea: Subversive Submersibles is water-adapted augmented reality (AR). It includes aquatic AR goggles, immersive AR environments and AR submergibles.

ImmerSea: Subversive Submersibles are installation experimentations involving creative real time superimposition of re-mashed audio visual overlays onto everyday audio-visual and other sense data for experience alteration and tabulation of reactions. The experiments focused on (1) Eye Candy Disruptors, (2) EcoSensual Synesthesia and (3) Gamification of Risk. Centered around our pop-up sustainable miniature golf course, we designed transmissions and communicated odd augmentations to our immersed and submerged co-artists in the Andaman Sea.

Field Report: IMMERSEA: SUBVERSIVE SUBMERSIBLES DETAILS

We had wonderful experiences doubling our sound and vision under the sea. Kira, Oya, Adam and Ryan formed a temporary collective of multimedia artists, bioartists, psycho-geographers, biologists and free thinkers who collaborated in the ImmerSea: Subversive Submersibles node at the Digital Naturalism Conference. We manipulated live feed footage from environmental settings. We designed and built submerged interactive/immersive sound and video wearables for architecturally encasing art installations using site specific sonic and tactile sources. We ran experiments in psychological experience and altered states of semi-consciousness. We dedicated our time on the island to rugged prototyping allowing for flexibility that utilized the ready-made technics we brought, borrowed and found. We incorporated naturally occurring sounds found in the acoustic environment of Koh Lon and built them into our novel device-laden suits.

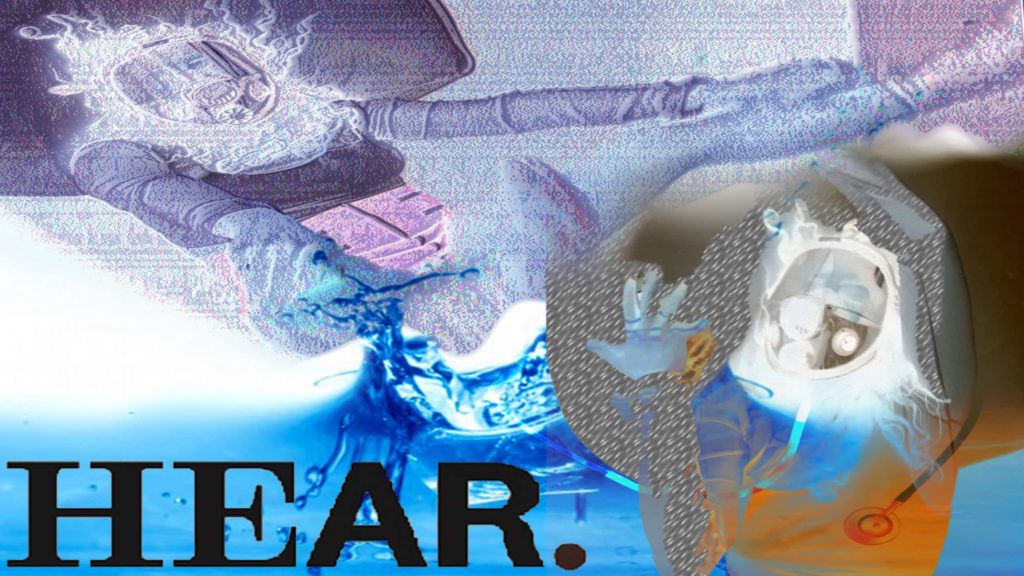

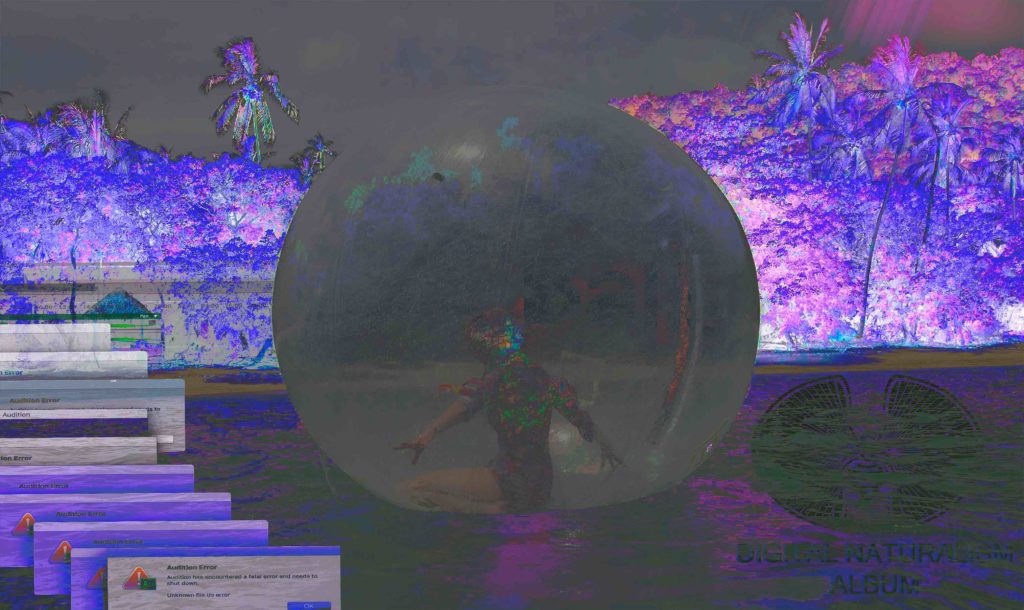

In the midst of a lush tropical island environment, we composed six channel, ‘real’ surround sound for zorb ball immersive experiences and Video Jockey creative misuse of AR software explorations for a hand crafted underwater iPad floatie suit. We detuned, crashed out and mashed up with our sound art, performance art and mad interdisciplinary sciart skillz in the DinaCon Cone of Tropical Geekdom (DCCTG). Underscoring our critical quandary into behavioral, cognitive and queer studies, while relying on our own brand of fringe philosophy, we unpack for you here our experimental designs and the resultant psychoacoustic/psychogeographical analysis of augmented underwater experience.

Yes, we designed submerged aquatic AR environments and underwater AR submergible wearables. ImmerSea: Subversive Submersibles made installations of DIY bio-body art experimentation by building mediated exoskeletons and getting in them, in the water. We were receiving creative, real-time and re-mashed audiovisual overlays while in and on the sea. We had that experience of alteration that mocks, and yet joins in, screen hypnosis through gadget love/hate relations. In this field report we include our own qualitative tabulation of reactions from the audio visual occlusion front of mixed reality superimposition. Working from the vantage points of artistic self-experimentation, tech-no-masochism and begrudgingly admitted tech-titillation, the following is our lab book.

What is Hydro Immersive Augmented Reality (HIAR) to Us?

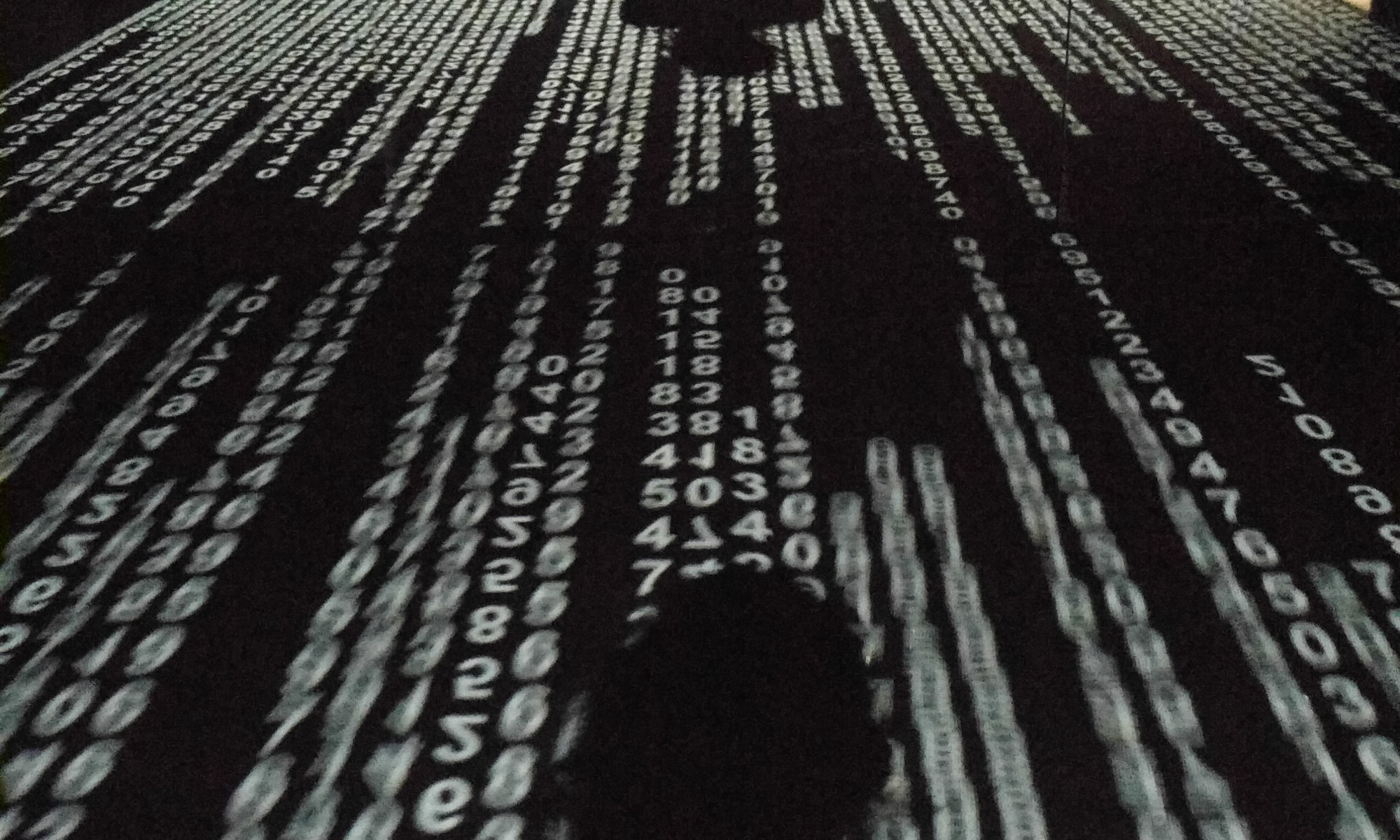

Augmented Reality is a super-imposition of digital media onto the natural world for artificial intimacy. We study Computer-aided User Deprogramming Experience (UDX) as well as push-advertisement styled media coercion. Is immersion in the sea a way towards more easily abscessed critique of a common mass obsession? Due to the changing state of the aqueous mind, an undersea, layered-on, disruptor of immersive relaxation may be the ticket towards a reveal of the prosthesis between the blinds of both inner and outer worlds. In other words, we theorize that Underwater AR may provide evidence of cognitive glitch implicit in consciousness (i.e. equating the needless stressor blow out anomie to the thrill of mediated stim seduction excess). Does the fall of alienation through a leisurely addition of social entertainment screens open us to gamified virtual vacation communication as utter surrender?

“We lived once in a world where the realm of the imaginary was governed by the mirror, by dividing one into two, by theatre, by otherness and alienation. Today that realm is the realm of the screen, of interfaces and duplication, of contiguity and networks. All our machines are screens, and the interactivity of humans has been replaced by the interactivity of screens. … We draw ever closer to the surface of the screen; our gaze is, as it were, strewn across the image. We no longer have the spectator’s distance from the stage — all theatrical conventions are gone. That we fall so easily into the screen’s coma of the imagination is due to the fact that the screen presents a perpetual void that we are invited to fill. Proxemics of images: promiscuity of images: tactile pornography of images. Yet the image is always light years away. It is invariably a tele-image — an image located at a very special kind of distance which can only be described as unbridgeable by the body. … There is no ambiguity in the traditional relationship between man and machine: the worker is always, in a way, a stranger to the machine he operates, and alienated by it. But at least he retains the precious status of alienated man. The new technologies, with their new machines, new images and interactive screens, do not alienate me. Rather, they form an integrated circuit with me.”

– From Xerox and Infinity, Jean Baudrillard / Translated by James Benedict

This essay was originally published as part of Jean Baudrillard’s “La transparence du mal: Essai sur les phénomènes extrêmes” (1990), translated into English in 1993 as “The Transparency of Evil: Essays on Extreme Phenomena”, http://insomnia.ac/essays/xerox_and_infinity/

That the near entirety of living human animal populations could be bought off so succinctly with a two thumbed, LCD touch sensitive, tool-being hand to eye sized bauble that bleeps intermittent rewards is a tribute to the era of Homo Rechargerus and the addictive nature of conditioning. Regardless of however trite the reward (the bleep for bleep’s sake or the glow brightness itself as iHearth), regardless how onerous the odds, regardless of how guaranteed the eventual amnesiac economy of loss, this is tech that offers satiation on the installment plan and hence satisfaction for many.

Simply by cataloging the percentage of time spent recharging our handhelds we can tabulate the incremental toll, the hemorrhagic loss of service that our screens take. The moth-like to the light of the screen identity is a relic of the TV years. Identity has now gone beyond the CRT beam hook into the infinite line of LCD glow scrolling (touch sensitive screen) and the audio intermittent reward of Pavlovian ring tones and alerts. The ‘you’ve got mail’ instaSnap Limited Interactivity Extended Reality (ISLIXR) of AR superimposition is the clicker training of the commons.

How and Why are We Using AR?

This project engages with topics of behavioral immersion by connecting environmental and experiential bio-data-tics across human, animal, and technological populations. Here, media manipulation serves to make post-environmental and post-biological post-truth information experientially available, stimulating curiosity and interest previously inaccessible to post-programmed populations (the human, post-human and a-human organismic masses). Initializing User Deprogramming Experience (UDX) for Mashup Remix Mixed Reality (MRMR), we present third or fourth level irony in a time where Kitsch has fallen flat. The layers of bullshit detectable have upped the delayed ante on Critique. We are still trying to favor pop-regurg-a-purge hypnogogic options to standard immersion. Into which product orientation seminars shall we build satire? What exactly has been left un-gamified? What target has the naturalism to uncover, to reveal, as opposed to make appear less world wide cobwebby?

Our three experimental designs are titled: Eye Candy Disruptor, EcoSensual Synesthesia and Gamification of Risk. This report includes the materials, methods and results of these three experiments. It is our hope, in the spirit of the Digital Naturalism Open Source Public Lab Aesthetics (DINAOSPLA), that the art over data ratio (A/d) will be equal to or greater than one. This is of course plus or minus a 3% negsanguineously margin of error for the duration of our unstill life studies.

Immersea Experiment One: Eye Candy Disruptors

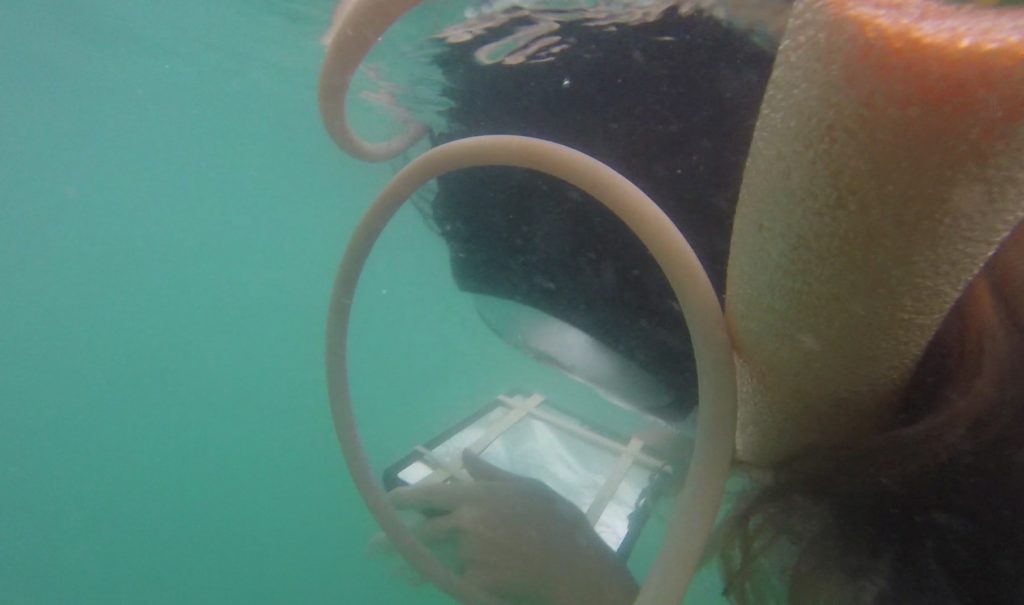

Goals: Through the mediatization of audiovisual fields with Live VJ Blipvert AR in a wearable AR snorkeling suit, we monitor Exposure Therapy to virtual/physical superimposition. The attempt to approach some semblance of mediated saturation, beyond both utility and entertainment, was our goal. We were able to modulate variables of visual time alterity and applied repetition media regimes. This conforms with our intention to study the effects of the artistic taking over of the augmented visual and audio field with more Crap than is already available while swimming in the open sea.

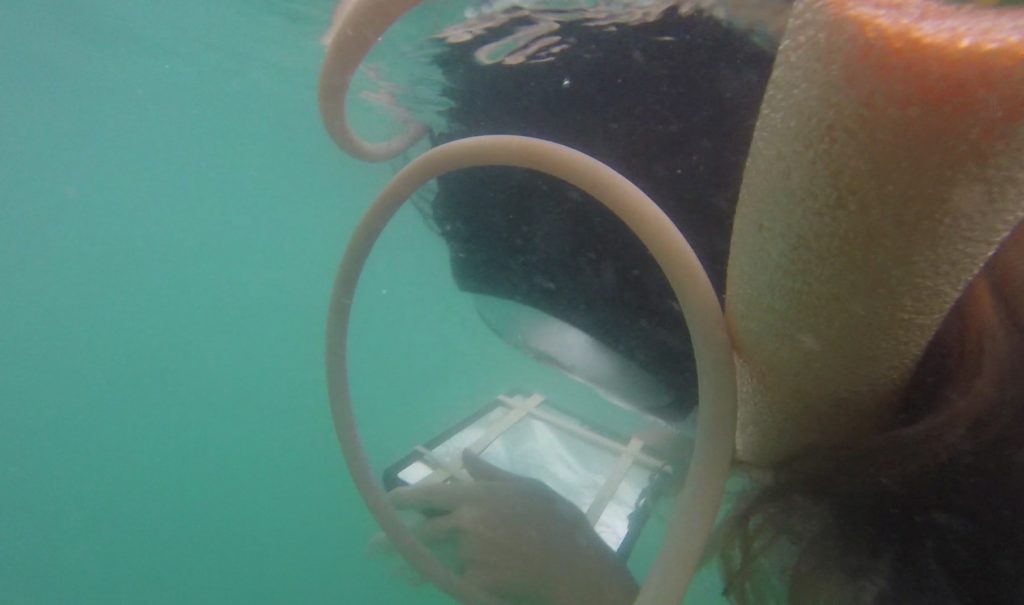

Materials: DIY waterproof iPad, wearable AR snorkeling suit

Methods: Much of our ‘data’ is based on pre-present & post surveys of human performance volunteers focusing on Annoyance, Habituation and Recall as quantitative, qualitative and catatonicative data sets. Qualitatively, we used an all-orificial data set including questionnaires for tabulation. This entails a synopsis of amalgamated qualitative data from all 11-13 of the major collection points or body portals of our voluntary human arts subjects. This survey data was compared to motion analysis of body language tracking video documentation. Use of binaural new age meditation audio with 31% translucent clouds as a control was meant to approximate degree zero samsara experiential authenticity in order to compare the subject reactions to experiential, repetitive, strobic, vaporwavicle, remash noise ratios.

Results: People had fun! People clamored to be inside the contraption. People craved to swim with a screen in their face, to snorkel in the most lovely sea while looking through a busily augmented screen full of touch sensitive 3D emojis[1] and first person zombies[2] to shoot and three dimensional glitter drawings tracked by space and head movement[3]. The idea was that snorkeling through schools of fish or peeking at anemones in coral reefs would be reason enough to dispose of the screen. We were wrong: most people want to augment and peer through the screen as often as they can. Instead of non-virtual fidelity, celibate edutainment is the norm.

Future Research: We hope future experiments will include real-time monitored reactions to live blipvert wireless VJ remashed madvertisements. Superimpose motion tracked data collection with specific experimental brain wave stimulating binaural-sextanaural new age automated samsara control variables, and we may score that mind control research grant we always dreamed of. The plan for now is to expand our repertoire into a wider manipulative media range through: percentage of audiovisual augmentation (loudness and field of superimposed vision), speed of editing-jumpcutting, strobic/distorted sonic and signal to noise ratios. These variables should be automated to increase whole brain de-synchronicity and imprint vulnerability. We are still looking for something like real-time giphy world 3D sculpt brush AR jockey software for beaming real time to others in the underwater AR screen spectator faceplant worlding. Perhaps that is something we could seek collaboration for building.

Immersea Experiment Two: EcoSensual Synesthesia

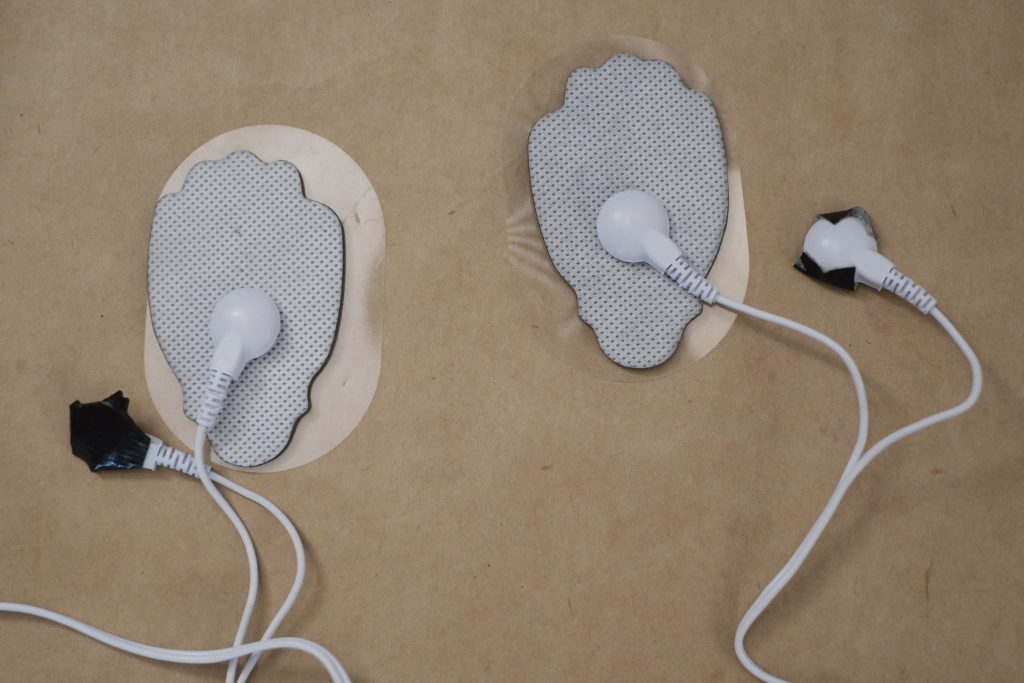

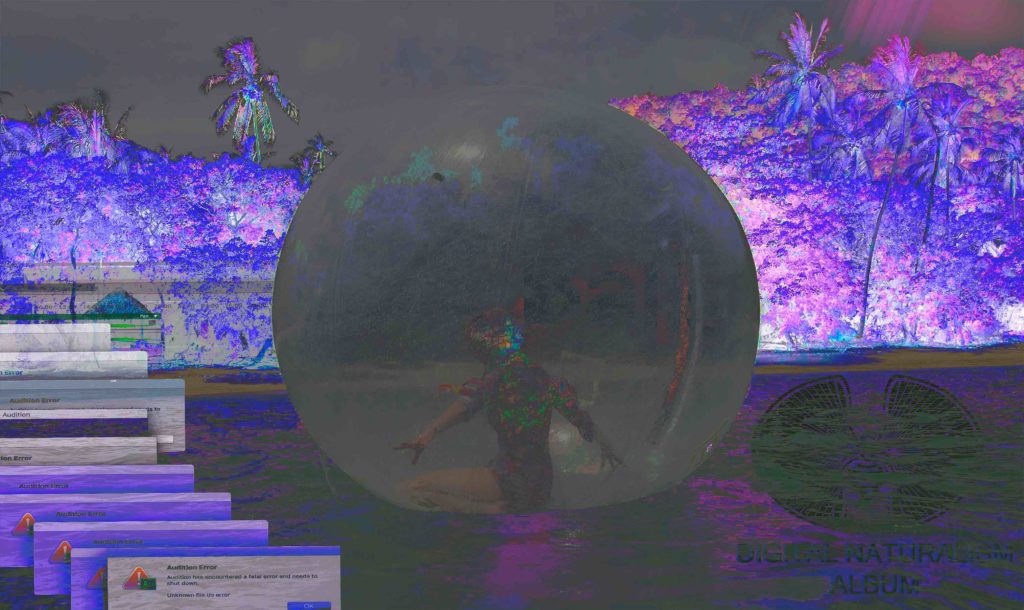

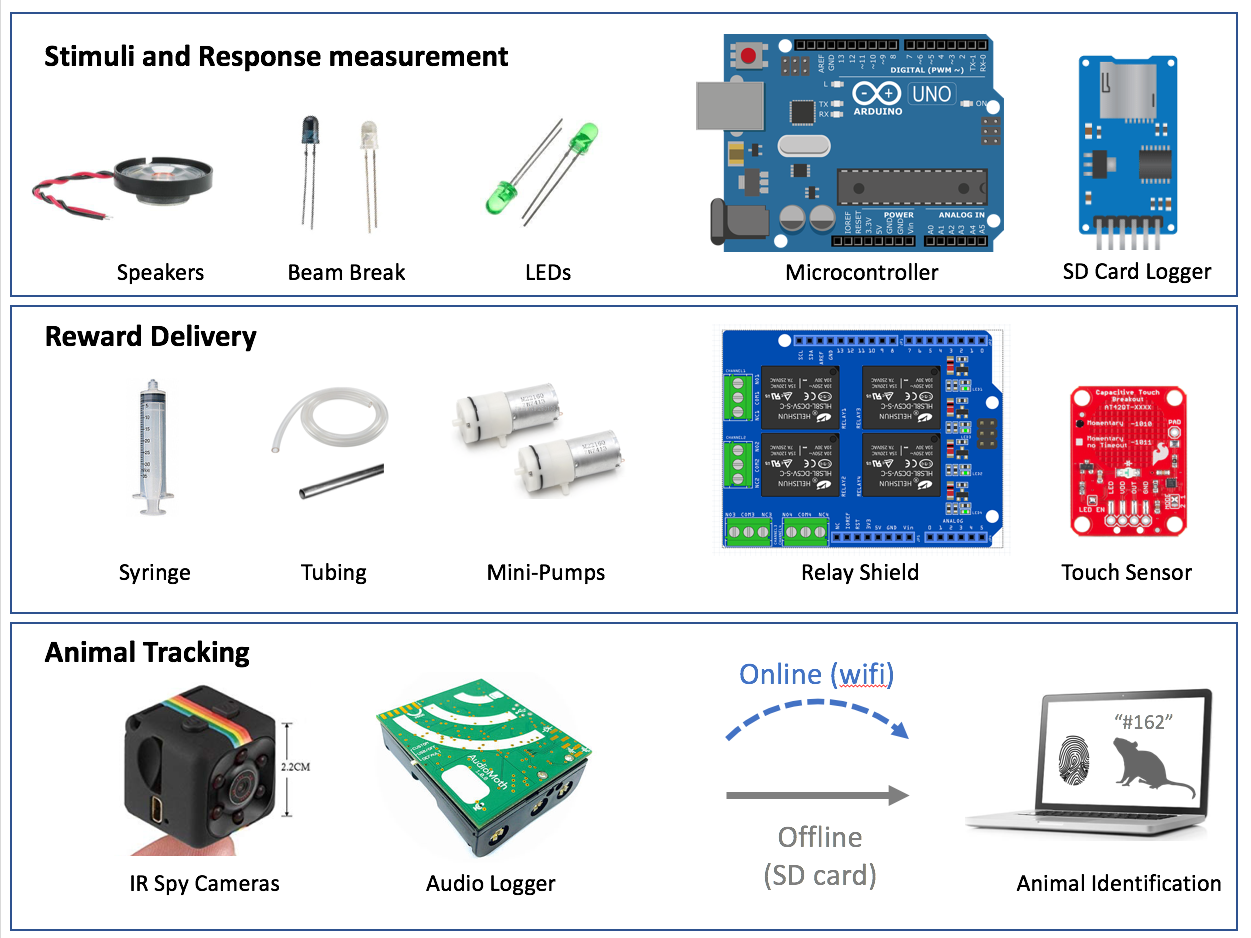

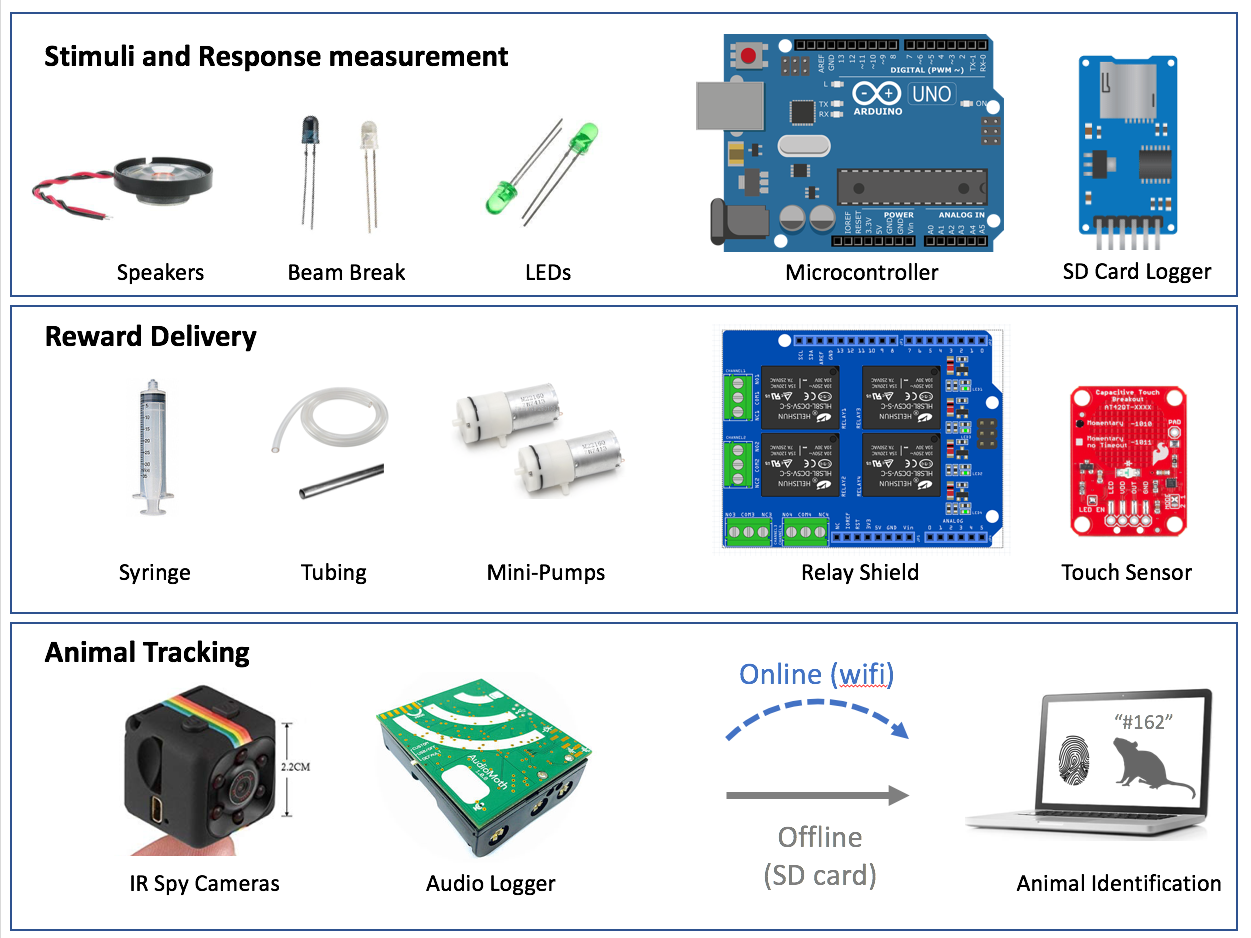

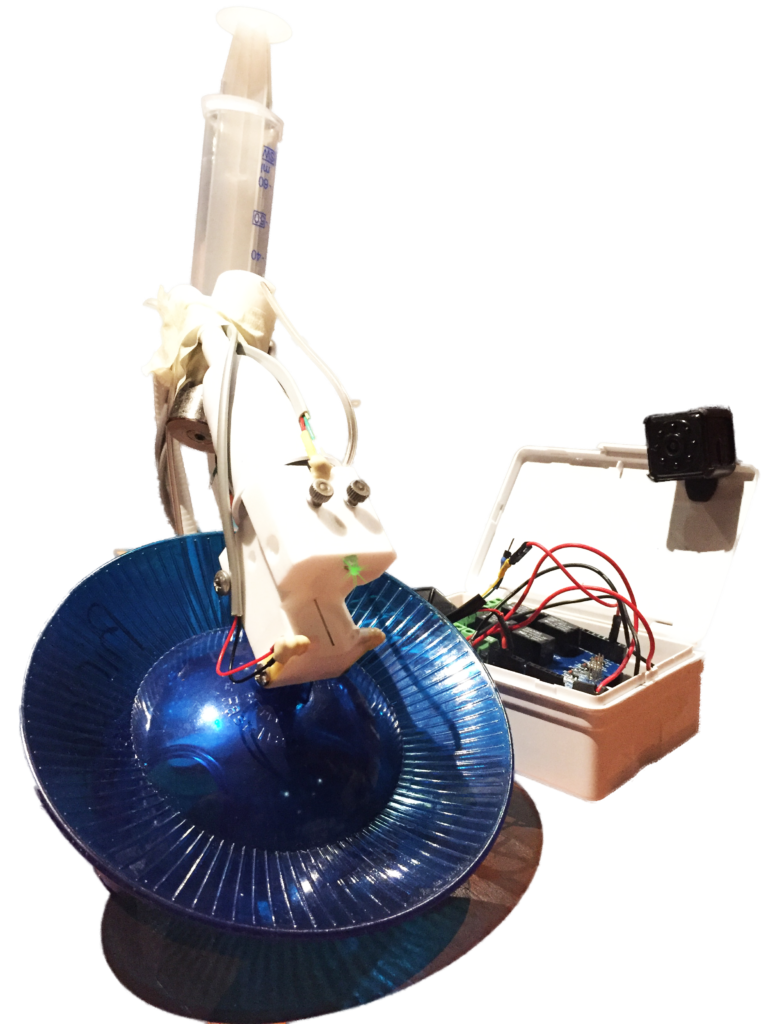

Goals: We are focused on smell, taste, CNS nerve stimulating (touch) and proprioception for this experiment. We created an inhabitable instrument, the semi-submerged wireless human augmented reality zorb ball interface (see image and caption), to measure sonic arousal in a spherical 360 degree sextanaural sound space.

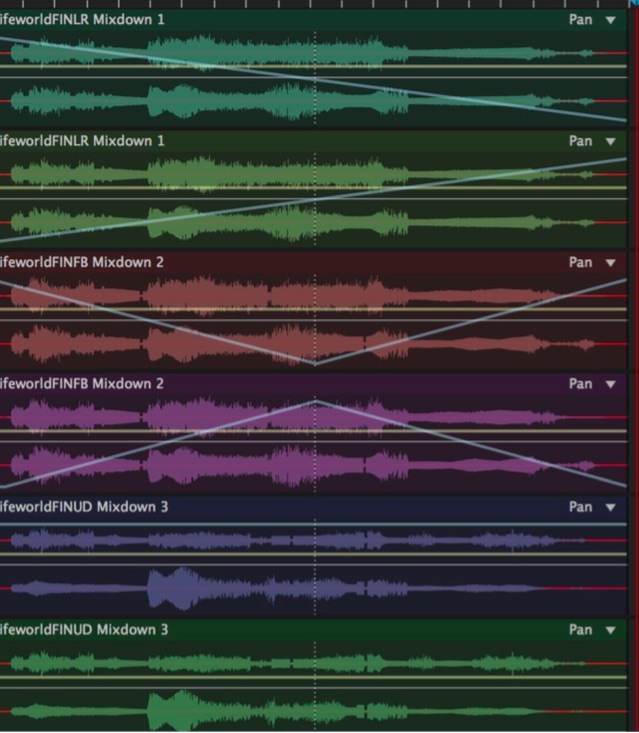

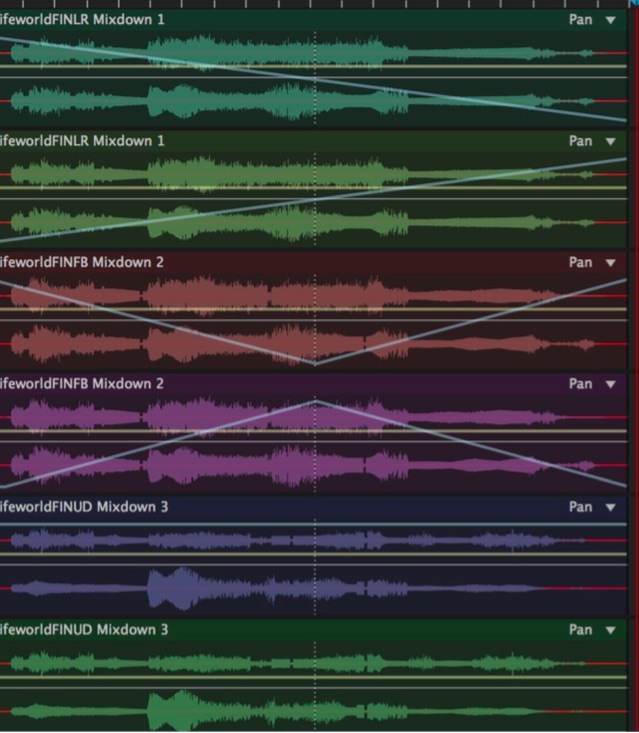

Materials: We created an inhabitable instrument — the semi-submerged wireless human augmented reality sextanaural zorb ball interface. This included a zorb ball and six waterproof portable MP3 players with six MicroSD cards synched to play our six channel compositions. We composed several six track digital audio compositions. These included six tracks to 6 speakers in the Zorb Ball (Front, Back, Up, Down, Left, Right), as well as experimenting with binaural standing waves in full six channel dimensionality (hence sextanaural sonic space) in a closed and yet N degree of cognitive freedom user movable space.

Methods: In order to transmit the sensual sounds of the island into a semi-submerged human augmented reality zorb ball interface we positioned the waterproof speakers: fore, aft, port, starboard, stern and bow. The six channel sonic compositions are available online, both mixed and as the original six directionally distinct tracks. They were made from generated, remashed and digital audio field recordings of the Koh Lon local island ecosphere soundscapes.

You can try this yourself! “Music For Zorb Balls” is the result of a Digital Naturalism Conference Project, ImmerSea: Subversive Submersibles. An experiment in sext-o-phonic sound (6-channel 3D omni-directional audio), “Music for Zorb Balls” documents audio tracks created for an immersive media-tastic soundscape while in an inflatable bubble on water. For our purposes, we assigned each directional track to six separate wireless Bluetooth speakers attached to points inside the Zorb ball. The audio can be streamed through any audio-playing device: iPhones, iPods, iPads, iAmASpeaker, Car Stereo Speakers, etc. Try duct taping pillows to friends and slipping their phones on loop into the pillow cases and then modern dance moshing with them while connected to their feet and hands with industrial rubber bands. This may give you the interactive UDX experience that it takes to feel the interface.

The album is free for public access and includes each artist’s contribution to the interactive sound installation. For public replication of this experiment, download each directionally determined track and assign each to individual speaker outputs for the best, most disorienting experience.

To download the full album from Bandcamp, select “Buy the Full Digital Album” but rest assured, the album and all tracks are free! Name your price at $0 (or more!). To download only specific tracks from the album, select the track(s) of choice and select “Buy Digital Track”. FREE. Enjoy!

https://immerseadinacon.bandcamp.com/album/music-for-zorb-balls
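For replication with a single multichannel audio interface rather than six separate players, the six downloaded tracks could be interleaved into one six-channel file. What follows is a minimal Python sketch under stated assumptions, not the method used on Koh Lon: the function names, the channel order (front, back, up, down, left, right, taken from the speaker positions listed in the Materials) and the 16-bit PCM format are illustrative choices, and each track is assumed to be already decoded into a list of integer samples.

```python
import io
import struct
import wave

# Assumed channel order, borrowed from the six speaker positions in the report.
DIRECTIONS = ["front", "back", "up", "down", "left", "right"]


def interleave_channels(tracks):
    """Interleave six mono tracks (lists of 16-bit ints) into one sample stream.

    Shorter tracks are padded with silence so every speaker plays the same length.
    """
    missing = [d for d in DIRECTIONS if d not in tracks]
    if missing:
        raise ValueError(f"missing tracks: {missing}")
    length = max(len(tracks[d]) for d in DIRECTIONS)
    samples = []
    for i in range(length):
        for d in DIRECTIONS:
            track = tracks[d]
            samples.append(track[i] if i < len(track) else 0)
    return samples


def write_six_channel_wav(fileobj, tracks, sample_rate=44100):
    """Write the interleaved tracks as a six-channel, 16-bit PCM WAV."""
    samples = interleave_channels(tracks)
    with wave.open(fileobj, "wb") as wav:
        wav.setnchannels(len(DIRECTIONS))
        wav.setsampwidth(2)  # two bytes per sample, i.e. 16-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))
```

Passing a file path or an open binary file as `fileobj` gives one file whose channels can then be routed to six speaker outputs. Whether a given player or sound card honors all six channels depends on the hardware, so the one-speaker-per-track approach described above remains the simplest route.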

The system of zorb-bodies conformed to the human hamster wheel XYZ audio architecture simplot. Our research subjects were floating spherical on the waves of an immersive interface. This six channel Zorb space is semi submerged with user controlled motion and multi sense intensities. This is implicit in most full immersion media heavy, seafaring globes of transparent inhabitation. Our interest in binaural sounds stemmed directly from researching the CIA funded Monroe Institute[4] declassified documents.
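For readers who want to hear what a binaural beat is before reading declassified documents about it, here is a minimal Python sketch. It assumes the standard description: each ear receives a pure tone at a slightly different frequency, and the listener perceives a beat at the difference between the two. The function names and the 200 Hz / 210 Hz example values are illustrative assumptions and are not taken from our compositions.

```python
import math


def beat_frequency(left_hz, right_hz):
    """Perceived beat rate: the absolute difference of the two ear tones."""
    return abs(left_hz - right_hz)


def binaural_beat(left_hz, right_hz, seconds, sample_rate=44100):
    """Return stereo frames as (left, right) floats in the range [-1.0, 1.0].

    Each ear gets its own sine tone; the two are never mixed in the signal,
    so any beating is produced by the listener, not by the speakers.
    """
    n_frames = int(seconds * sample_rate)
    frames = []
    for i in range(n_frames):
        t = i / sample_rate
        left = math.sin(2 * math.pi * left_hz * t)
        right = math.sin(2 * math.pi * right_hz * t)
        frames.append((left, right))
    return frames


# Example values only: a 200 Hz tone in one ear and 210 Hz in the other.
frames = binaural_beat(200.0, 210.0, seconds=1, sample_rate=8000)
```

With these example values `beat_frequency(200.0, 210.0)` is 10 Hz. Headphones are needed for the effect; through open speakers the two tones mix in the air and produce an ordinary acoustic beat instead.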

Results:

“Being a primary experiment subject contained within the Zorb Ball fulfilled many fetal fantasies of being inside of a techNO-FEAR PROTECTO-SPHERE. Surprisingly, this enclosed experience externalized dissociation in a most dreamy, oxygen-deprived way. The sun beaming down on the Andaman Sea quickly steamed the dream bubble, creating a sauna effect. This, coupled with sonic bombardment from all directions (up, down, left, right, front, back) was immediately disorienting and yet, cacophonously comforting due to cultural familiarity with over-commercialization of experience. It was a clumsy kinetic learning-curve to walk on water, with big Jesus-like footsteps to fill. This tumbling was quite entertaining and meditatively exhausting due to the amount of energy exertion required to move an immeasurable amount in any direction. This cyclical going-nowhere and treading on water must be what hamsters and laborers trapped in capitalist loops feel like. In conclusion, this inverse rebirth was enlightening and recommended for anyone in search of an asphyxiatingly intense psychophysical re-alignment through mediated reprogramming.”

– Kira deCoudres.

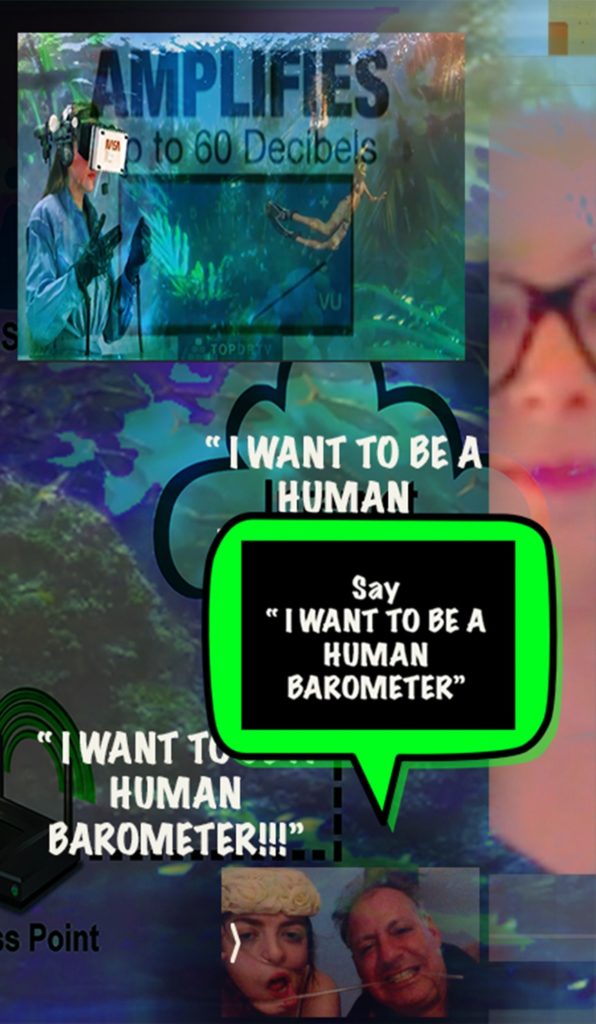

Future Research: We would like to experiment with waterproof, six channel, acoustic contact microphones that use streaming wifi. Live streaming from six sonic on-ground (or hydrophonic ‘in-water’) contact sites of interest would be fun and analog enough to have a warming mediatationed effect. With the right psychogeographically positioned field station, we could include instrumentation used to collect data that measures arousal (for instance, a penile or clitoral plethysmograph, an electromyograph for rhythm contraction data, Doppler velocimetry penis cuff/sleeve, etc). But there is a limit to the measurement devices available for five kingdoms wide, cross species real time arousal. These interspecies types of inclusive all-phylum arousal economies of fetish measurement devices can be prescient techno-predictors of both the chemistry and early reporter weather predictions[5]. Orgonomic coefficients coincident with ecological forecasting may yet prove that incorporating the sensual psychophysiology arts into climate change studies is a novel use value for our currently sparse interspecies, Kinseyesque, sexual response data and… correlated to climate change… we have even more reasons to cum[6].

Immersea Experiment Three: Gamification of Risk

Goals: This experiment is about what level of virtual overtaking of the ‘actual’ is possible by sudden and incremental alarming risk suggestion. We are looking at demonstrating extreme what ifs, neurotic paranoia and underwater fear factors to induce panic. This is to see if reliance on augmentation is such an addictive autopilot that users are capable of downgrading risk assessment or if danger as a concept can be subsumed by the multisensorial eye candy and responsive ISLIXR HIAR environments.

Materials: Zombie Apocalypse AR software, fake shark fin hat, fear itself and general tropical sea/mangrove/rain-forest fear mongering information sessions for players.

Methods: The intention is to compare notes on the reaction to panic attack inducing threatcasts, with or without augmented reality. Working with the generation of psychotic states or schizogenesis, we combine overlaying the audiovisual experiences of emergencies with actual dangerous or convivially able to be perceived of as dangerous environments such as the Andaman Sea. So, this is predominantly a genre of overlaid media to be explored while at risk of shark attack, drowning, unusual currents, poisonous fish, stinging jellyfish and sea gypsy pirates of the Malay Peninsula. Immersea experimental volunteers were subjected to a media barrage of: flashing lights, loud voices giving orders, mass media styled endless emergency warnings, faux shark attack and crashed, impeded glitch, audiovisual environments.

Results: Actual dangers encountered were: strong currents that nearly drowned us, coconuts falling from the sky, jellyfish stinging and oxygen depletion in the Zorb ball as well as AR zombies everywhere. There was a distinct inability for the volunteers to conceptualize a difference between the Gamified Eye Candy and the emulation of mind control fascist depersonalization indoctrination cult tactics. It all seemed like an enjoyable and playful adventure, until the air started running out or the drowning feelings started. At that point, the virtual became secondary to survival, so there is still much to learn before we overcome that urge to stay alive outside of the MRMR XR.

Future Research: The superimposition of a second, generated or algorithmically filtered version of your sense data accruement through your inborn and falsifiably inept orifice input-output economy may be safer than separating the two on competing screens (LCD/Flesh). Commercially available screens are brighter than the in-born filtered (i.e. eyes or nostrils) sense data of the everyday world. Interestingly, we found that digital LCD camera flowthrough screens that are not semi-transparent (like phones or tablets) may be of a higher bodily harm risk than mixed reality helmets or headgear. Semi-immersive gadgets are smaller, brighter, louder, more vibratory and more penile than immersive headgear or wearable environments. Comparing risk assessment artifacts and actual risk of the handheld gadget versus the wearable gadget may be worth looking into further. It does seem that any situation other than life endangering impact crashes or other forms of sudden, potentially fatal, mortal ruin are considered to be most often ignorable in or out of augmented reality. On the other hand, even long, drawn out, miserable pain itself can plausibly always be heightened by the digital.

A final Gamified Risk analysis involves the cognitive effect of the amplification of technological pain along with analog pain. This is a question of the rehabituation of illusory meta-pain in dialogical interplay with brute force. The competition between immersive digital torment and screen based digital torment in relation to bodily (technologically unattached) torment is a Department of Defense (DoD) issue worth pursuing[7]. Perception of risk is a fun and interesting area to analyze when comparing distinct media to the cognitive conception of the unmediated Central Nervous System (CNS). The real money is probably in how much poor risk assessment behavior can be coordinated through UDX AR MRMR DoD & ISLIXR CNS (see Terms Key below) as opposed to other kinds of mind control in terms of fully trained assets and glitching enemy assets.

Sustainable Mini Golf

Simply as a Central Node for ImmerSea: Subversive Submersibles at Dinacon, we spearheaded the first ever Sustainable Miniature Golf Course. Nearly on the equator, this might start an equatorial fad, especially with a Biodiversity Banking Theme!

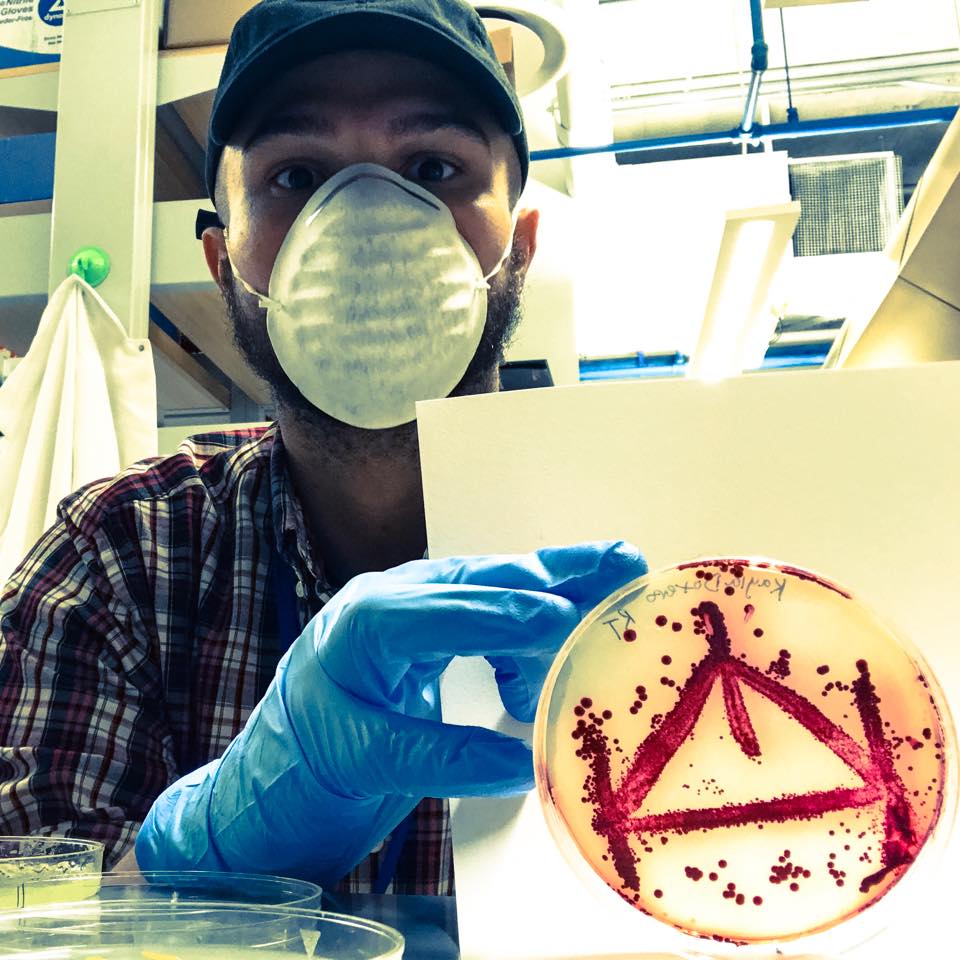

Miniature golf is great low-impact fun especially if it is sourced from local and sustainable materials. Our node hosted a social outpost made from crafted natural golf balls (baby casaba), DIY golf clubs (bamboo and brain coral and conch shells, tied together with coconut twine) and temporary redesign of loosely themed nature. Equatorial Sustainable Miniature Golf Courses are a perfect interface to teach-in about biodiversity, human gene editing and bioethics.

The ImmerSea: Subversive Submersibles Equatorial Biodiversity Bank Themed Sustainable Miniature Golf Course was a home base for our node as well as the source-mixing and wireless beaming for our remixed Immersea databank. Beginning with local Koh Lon mangrove, rainforest and coral reef biomes, this minimal impact portable eco-minigolf course houses the qualitative and quantitative ImmerSea: Subversive Submersibles library of biodiversity. The ImmerSea: Subversive Submersibles Equatorial Biodiversity Bank Themed Sustainable Miniature Golf Course is the AR-VJ immersive transmission station to our underwater ImmerSea: Subversive Submersibles Human Subjects. Our video, audio, photo and written collection of ImmerSea: Subversive Submersibles biodiversity notes has been kept in a waterproof lab book; notes and data available by request. We beam our tracks for output into the ImmerSea: Subversive Submersibles Biodiversity Bank Themed Sustainable Miniature Golf Library which functions as a VJ home base for UDX AR MRMR ISLIXR underwater and semi-submerged transmissions.

Let’s talk about how the genes for the natural excesses of exuberant traits in the tropics might be pasted into the human genome. The Public Lab Book includes a tabulation and interpretation of Natural Excesses, Exuberant Traits and BioBanks for Future Human/Nonhuman Germline Hybrid Experiments in Human Inherited Genetic Modification. What do you think the range of possible future bodies is? Which GMO humans should be included in the Transgenic Human Genome Project (THGP)? What can the biodiversity of Koh Lon and the Andaman Sea tell us about the range of potentials, enhancements and options for future human anatomies?

Future of Artistic Research on the Interspecies Physiology of Immersive ArtSci Environments

This is an emotionally deep research process compared to the usual data recording pilot studies. Instead of using the general facial recognition software, eye tracking, blink assessment and live cortisol level monitoring (without a funding dollar spent on gestural, full embodied or any lower body orificial economy explorations), we would like to propose artistic research to amass fuller than standard physiological data spacescapings. Contiguous with our biomediated-popsyche-ecosensual experiential developmental theme, we are striving for an all-body-portal accumulative database. Future research will include anal-tensegrity monitoring as a part of any full physiological observation of our (w)hole organism [assuming this is an organism with an anus]. Along with gene expression patterning over time (including multi generational epigenetic environmental effects) and further collection of tangible and intangible objects from our subcognitive focus groups, we intend to use AI to data mine our UX bioinformatics data swarms to provide further MRMR iterative, permutative and pseudo random artistic nodes for future studies in Artistic Research on the Interspecies Physiology of Immersive DINAOSPLA ISLIXR HIAR ArtSci Environments.

TERMS KEY:

- AV = Audio Visual

- AR = Augmented Reality

- HIAR = Hydro Immersive Augmented Reality

- VR = Virtual Reality

- VJ = Video Jockey

- XR = Extended Reality

- ISLIXR = instaSnap Limited Interactivity Extended Reality

- UX = User Experience

- UDX = User Deprogramming Experience

- DCCTG = DinaCon Cone of Tropical Geekdom

- UI = User Interface

- MR = Mixed Reality

- MRMR = Mashup Remix Mixed Reality

- DoD CNS = Department of Defense Central Nervous System

- DINAOSPLA = Digital Naturalism Open Source Public Lab Aesthetics

- A/d = art over data ratio

Thanks to Andy and Tasneem for all the organizational skills and joy of DINACON. We did independent DIY research and fun, wild, hacking together with resultant novel instrumentation for alternate realities. Thanks also to Pat Pataranutaporn and Werasak Surareungchai of the Freak Lab https://freaklab.org KMUTT for generous support and for hosting the review, presentation and exhibition of our Artistic Research on the Interspecies Physiology of Immersive ArtSci Environments through Eye Candy Disruptor, EcoSensual Synesthesia and Gamification of Risk. Additional thanks to Tentacles Gallery, Bangkok, Thailand. Thanks to Praba Pilar for designing the initial Underwater AR prototype at our Woodstock gridfree residency in preparation for the NO!!!BOT performances at Grace Space in NYC, May 2018. https://www.prabapilar.com/events/nobotnyc

[1] the 3D AR software Giphy World https://giphy.com/apps/giphyworld

[2] ARZombi iOS11/ARKit game https://www.arzombigame.com/

[3] World Brush https://worldbrush.net/

[4] For instance, Binaural Beats and the Regulation of Arousal Levels by F. Holmes Atwater, BA (Hemi-Sync Journal, Vol. I, Nos. 1 & 2, Winter-Spring 2009) https://www.monroeinstitute.org/article/3002 More information: The Monroe Institute, Binaural PTSD recovery and Astral Projection for military remote viewing and CIA mind control. P.O. Box 505, Lovingston, VA 22949. Founded and directed by Robert Monroe from 1974 until his death in 1995, the Institute held classified contracts with the U.S. Army Intelligence & Security Command (INSCOM) on orders by Gen. Albert Stubblebine. The Institute studied their Hemi-Sync techniques to see if they could enhance soldiers’ performance and concentration. (Emerson, Steven, Secret Warriors, G.P. Putnam’s Sons, 1988, pg 103-4) The primary area of research at the Monroe Institute involves using a binaural beat to cause different psychological effects. A binaural beat is created by using stereo headphones with each speaker emitting a slightly different frequency. The result is a perceived beat at the difference between the two frequencies, which allegedly causes the brain to “entrain” on that frequency, i.e. the brain waves regulate themselves to the same frequency. The National Research Council evaluated the Institute’s claims that the method could be used to improve learning. (National Research Council, Enhancing Human Performance, National Academy of Sciences, 1988, pg 111-4) “..located near Charlottesville, Virginia. Bob Monroe, author of many books on Out of Body experiences, has long and close ties with the C.I.A. James Monroe, Bob’s father, if I’m not mistaken, was involved with the Human Ecology Society, a C.I.A. front organization of the late 50’s and 60’s. The Monroe Institute has done research on accelerated learning and foreign language learning through the use of altered states of consciousness for the C.I.A. and other government organizations. Government interest in the more radical research going on at the institute remains only tantalizing speculation. Official classified document storage boxes have been seen at their mail-order outlet located in Lovingston, VA.” – Porter, Tom, Government Research into ESP & Mind Control, March, 1996. THE MONROE INSTITUTE’S HEMISYNC PROCESS – Document Type: CREST; Collection: STARGATE; Declassified: December 4, 1998; CIA-RDP96-00788R001700210004-8.pdf; Approved For Release 2003/09/10: CIA-RDP96-00788R00170021000 “Hemisync is a patented auditory guidance system which is said to employ the use of sound pulses to induce a frequency following response (FFR) in the human brain. It is reported that the Hemisync process can heighten selected awareness and performance while creating a relaxed state. Hemisync is more than this however, and an extensive evaluation is warranted.”

[5] This is why we are seeking an antianthropocentric, anthropo-scenic ecologist/ meteorologist device developer to join our team. Please contact vastalschool@gmail.com if you are interested in applying.

[6] See: http://sexecology.org/ , https://www.fuckforforest.org

[7] Neurological Manifestations Among US Government Personnel Reporting Directional Audible and Sensory Phenomena in Havana, Cuba, Randel L. Swanson II, DO, PhD; Stephen Hampton, MD; Judith Green-McKenzie, MD, MPH; et al